from onprem import LLM

llm = LLM(verbose=False) # default model and backend are used

OnPrem.LLM

A privacy-conscious toolkit for document intelligence — local by default, cloud-capable

OnPrem.LLM (or “OnPrem” for short) is a Python-based toolkit for applying large language models (LLMs) to sensitive, non-public data in offline or restricted environments. Inspired largely by the privateGPT project, OnPrem.LLM is designed for fully local execution, but also supports integration with a wide range of cloud LLM providers (e.g., OpenAI, Anthropic).

Key Features:

- Fully local execution with option to leverage cloud as needed. See the cheatsheet.

- Analysis pipelines for many different tasks, including information extraction, summarization, classification, question-answering, and agents.

- Support for environments with modest computational resources through modules like the SparseStore (e.g., RAG without having to store embeddings in advance).

- Easy integration with existing tools in your local environment, such as Elasticsearch and SharePoint.

- A visual workflow builder to assemble complex document analysis pipelines with a point-and-click interface.

The full documentation is here.

Quick Start

# install

!pip install onprem[chroma]

from onprem import LLM, utils

# local LLM with Ollama as backend

!ollama pull llama3.2

llm = LLM('ollama/llama3.2')

# basic prompting

result = llm.prompt('Give me a short one sentence definition of an LLM.')

# RAG

utils.download('https://www.arxiv.org/pdf/2505.07672', '/tmp/my_documents/paper.pdf')

llm.ingest('/tmp/my_documents')

result = llm.ask('What is OnPrem.LLM?')

# switch to cloud LLM using Anthropic as backend

llm = LLM("anthropic/claude-3-7-sonnet-latest")

# structured outputs

from pydantic import BaseModel, Field

class MeasuredQuantity(BaseModel):

    value: str = Field(description="numerical value")

    unit: str = Field(description="unit of measurement")

structured_output = llm.pydantic_prompt('He was going 35 mph.', pydantic_model=MeasuredQuantity)

print(structured_output.value) # 35

print(structured_output.unit) # mph

Many LLM backends are supported (e.g., llama_cpp, transformers, Ollama, vLLM, OpenAI, Anthropic, etc.).

Latest News 🔥

- [2026/01] v0.21.0 released and now includes support for metadata-based query routing. See the query routing example here. Also included in this release: provider-implemented structured outputs (e.g., structured outputs with OpenAI, Anthropic, and AWS GovCloud Bedrock).

- [2025/12] v0.20.0 released and now includes support for asynchronous prompts. See the example.

- [2025/09] v0.19.0 released and now includes support for workflows: YAML-configured pipelines for complex document analyses. See the workflow documentation for more information.

- [2025/08] v0.18.0 released and can now be used with AWS GovCloud LLMs. See this example for more information.

- [2025/07] v0.17.0 released and now allows you to connect directly to SharePoint for search and RAG. See the example notebook on vector stores for more information.

- [2025/07] v0.16.0 released and now includes out-of-the-box support for Elasticsearch as a vector store for RAG and semantic search in addition to other vector store backends. See the example notebook on vector stores for more information.

- [2025/06] v0.15.0 released and now includes support for solving tasks with agents. See the example notebook on agents for more information.

- [2025/05] v0.14.0 released and now includes a point-and-click interface for Document Analysis: applying prompts to individual passages in uploaded documents. See the Web UI documentation for more information.

- [2025/04] v0.13.0 released and now includes streamlined support for Ollama and many cloud LLMs via special URLs (e.g., model_url="ollama://llama3.2", model_url="anthropic://claude-3-7-sonnet-latest"). See the cheat sheet for examples. (Note: Please use onprem>=0.13.1 due to a bug in v0.13.0.)

- [2025/04] v0.12.0 released and now includes a revamped and improved Web UI with support for interactive chatting, document question-answering (RAG), and document search (both keyword searches and semantic searches). See the Web UI documentation for more information.

Install

Once you have installed PyTorch, you can install OnPrem.LLM with the following steps:

- Install llama-cpp-python (optional - see below):

  - CPU: pip install llama-cpp-python (extra steps required for Microsoft Windows)

  - GPU: Follow instructions below.

- Install OnPrem.LLM with Chroma packages:

pip install onprem[chroma]

For RAG using only a sparse vectorstore, you can install OnPrem.LLM without the extra chroma packages: pip install onprem.

Note: Installing llama-cpp-python is optional if any of the following is true:

- You are using Ollama as the LLM backend.

- You use Hugging Face Transformers (instead of llama-cpp-python) as the LLM backend by supplying the model_id parameter when instantiating an LLM, as shown here.

- You are using OnPrem.LLM with an LLM being served through an external REST API (e.g., vLLM, OpenLLM).

- You are using OnPrem.LLM with a cloud LLM (more information below).

On GPU-Accelerated Inference With llama-cpp-python

When installing llama-cpp-python with pip install llama-cpp-python, the LLM will run on your CPU. To generate answers much faster, you can run the LLM on your GPU by building llama-cpp-python based on your operating system.

- Linux: CMAKE_ARGS="-DGGML_CUDA=on" FORCE_CMAKE=1 pip install --upgrade --force-reinstall llama-cpp-python --no-cache-dir

- Mac: CMAKE_ARGS="-DGGML_METAL=on" pip install llama-cpp-python

- Windows 11: Follow the instructions here.

- Windows Subsystem for Linux (WSL2): Follow the instructions here.

For Linux and Windows, you will need an up-to-date NVIDIA driver along with the CUDA toolkit installed before running the installation commands above.

After following the instructions above, supply the n_gpu_layers=-1 parameter when instantiating an LLM to use your GPU for fast inference:

llm = LLM(n_gpu_layers=-1, ...)

Quantized models with 8B parameters and below can typically run on GPUs with as little as 6GB of VRAM. If a model does not fit on your GPU (e.g., you get a “CUDA Error: Out-of-Memory” error), you can offload a subset of layers to the GPU by experimenting with different values for the n_gpu_layers parameter (e.g., n_gpu_layers=20). Setting n_gpu_layers=-1, as shown above, offloads all layers to the GPU.
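The experimentation described above can be automated. The following is a minimal sketch, not part of OnPrem.LLM: load_with_fallback and fake_load are hypothetical helpers, and the sketch assumes that an out-of-memory condition surfaces as a catchable RuntimeError, which may not hold for every backend.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def load_with_fallback(
    load: Callable[[int], T],
    layer_counts: Iterable[int] = (-1, 32, 20, 10, 0),
) -> tuple[int, T]:
    """Try each n_gpu_layers value in order and return the first one that loads."""
    last_error: Optional[Exception] = None
    for n in layer_counts:
        try:
            return n, load(n)
        except RuntimeError as err:  # assumption: OOM is raised as RuntimeError
            last_error = err
    raise RuntimeError("no n_gpu_layers value fit on the GPU") from last_error


# Stand-in loader that pretends only 20 or fewer layers fit in VRAM.
def fake_load(n_gpu_layers: int) -> str:
    if n_gpu_layers == -1 or n_gpu_layers > 20:
        raise RuntimeError("CUDA Error: Out-of-Memory")
    return f"model with {n_gpu_layers} GPU layers"


chosen_layers, model = load_with_fallback(fake_load)
print(chosen_layers, model)
```

With a real model, the load argument could be something like lambda n: LLM(n_gpu_layers=n). Treat this as a pattern to adapt rather than a guarantee, since some backends may abort the process instead of raising an exception.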

See the FAQ for extra tips if you experience issues with llama-cpp-python installation.

How to Use

Setup

Cheat Sheet

Local Models: A number of different local LLM backends are supported.

Llama-cpp:

llm = LLM(default_model="llama", n_gpu_layers=-1)

Llama-cpp with selected GGUF model via URL:

# prompt templates are required for user-supplied GGUF models (see FAQ)

llm = LLM(model_url='https://huggingface.co/TheBloke/zephyr-7B-beta-GGUF/resolve/main/zephyr-7b-beta.Q4_K_M.gguf', prompt_template= "<|system|>\n</s>\n<|user|>\n{prompt}</s>\n<|assistant|>", n_gpu_layers=-1)

Llama-cpp with selected GGUF model via file path:

# prompt templates are required for user-supplied GGUF models (see FAQ)

llm = LLM(model_url='zephyr-7b-beta.Q4_K_M.gguf', model_download_path='/path/to/folder/to/where/you/downloaded/model', prompt_template= "<|system|>\n</s>\n<|user|>\n{prompt}</s>\n<|assistant|>", n_gpu_layers=-1)

Hugging Face Transformers:

llm = LLM(model_id='Qwen/Qwen2.5-0.5B-Instruct', device='cuda')

Ollama:

llm = LLM(model_url="ollama://llama3.2", api_key='na')

Also Ollama:

llm = LLM(model_url="ollama/llama3.2", api_key='na')

Also Ollama:

llm = LLM(model_url='http://localhost:11434/v1', api_key='na', model='llama3.2')

vLLM:

llm = LLM(model_url='http://localhost:8666/v1', api_key='na', model='Qwen/Qwen2.5-0.5B-Instruct')

Also vLLM (assumes the served-model-name parameter is supplied to vllm.entrypoints.openai.api_server):

llm = LLM('hosted_vllm/served-model-name', api_base="http://localhost:8666/v1", api_key="test123")

vLLM with gpt-oss (assumes the served-model-name parameter is supplied to vLLM):

# important: set max_tokens to high value due to intermediate reasoning steps that are generated

llm = LLM(model_url='http://localhost:8666/v1', api_key='your_api_key', model=served_model_name, max_tokens=32000)

result = llm.prompt(prompt, reasoning_effort="high")

Cloud Models: In addition to local LLMs, all cloud LLM providers supported by LiteLLM are compatible:

Anthropic Claude:

llm = LLM(model_url="anthropic/claude-3-7-sonnet-latest")

OpenAI GPT-4o:

llm = LLM(model_url="openai/gpt-4o")

AWS GovCloud Bedrock (assumes AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are set as environment variables):

from onprem import LLM

inference_arn = "YOUR INFERENCE ARN"

endpoint_url = "YOUR ENDPOINT URL"

region_name = "us-gov-east-1" # replace as necessary

# set up LLM connection to Bedrock on AWS GovCloud

llm = LLM(f"govcloud-bedrock://{inference_arn}", region_name=region_name, endpoint_url=endpoint_url)

response = llm.prompt("Write a haiku about the moon.")

The instantiations above are described in more detail below.

GGUF Models and Llama.cpp

The default LLM backend is llama-cpp-python, and the default model is currently a 7B-parameter model called Zephyr-7B-beta, which is automatically downloaded and used. Llama.cpp runs models in GGUF format. The two other default models are llama and mistral. For instance, if default_model='llama' is supplied, then a Llama-3.1-8B-Instruct model is automatically downloaded and used:

# Llama 3.1 is downloaded here and the correct prompt template for Llama-3.1 is automatically configured and used

llm = LLM(default_model='llama')

Choosing Your Own Models: Of course, you can also easily supply the URL or path to an LLM of your choosing to LLM (see the FAQ for an example).

Supplying Extra Parameters: Any extra parameters supplied to LLM are forwarded directly to llama-cpp-python, the default LLM backend.

Changing the Default LLM Backend

If default_engine="transformers" is supplied to LLM, Hugging Face transformers is used as the LLM backend. Extra parameters to LLM (e.g., device='cuda') are forwarded directly to transformers.pipeline. If a model_id parameter is supplied, the default LLM backend is automatically changed to Hugging Face transformers.

# LLama-3.1 model quantized using AWQ is downloaded and run with Hugging Face transformers (requires GPU)

llm = LLM(default_model='llama', default_engine='transformers')

# Using a custom model with Hugging Face Transformers

llm = LLM(model_id='Qwen/Qwen2.5-0.5B-Instruct', device_map='cpu')

See here for more information about using Hugging Face transformers as the LLM backend.

You can also connect to Ollama, local LLM APIs (e.g., vLLM), and cloud LLMs.

# connecting to an LLM served by Ollama

llm = LLM(model_url='ollama/llama3.2')

# connecting to an LLM served through vLLM (set API key as needed)

llm = LLM(model_url='http://localhost:8000/v1', api_key='token-abc123', model='Qwen/Qwen2.5-0.5B-Instruct')

# connecting to a cloud-backed LLM (e.g., OpenAI, Anthropic).

llm = LLM(model_url="openai/gpt-4o-mini") # OpenAI

llm = LLM(model_url="anthropic/claude-3-7-sonnet-20250219") # Anthropic

OnPrem.LLM supports any provider and model supported by the LiteLLM package.

See here for more information on local LLM APIs.

More information on using OpenAI models specifically with OnPrem.LLM is here.

Supplying Parameters to the LLM Backend

Extra parameters supplied to LLM and LLM.prompt are passed directly to the LLM backend. Parameter names vary depending on the backend you choose.

For instance, with the default llama-cpp backend, the default context window size (n_ctx) is set to 3900 and the default output size (max_tokens) is set to 512. Both are configurable parameters to LLM. Increase them if you have larger prompts or need longer outputs. Other parameters (e.g., api_key, device_map, etc.) can be supplied directly to LLM and will be routed to the LLM backend or API (e.g., llama-cpp-python, Hugging Face transformers, vLLM, OpenAI, etc.). The max_tokens parameter can also be adjusted on the fly by supplying it to LLM.prompt.

On the other hand, for Ollama models, context window and output size are controlled by num_ctx and num_predict, respectively.

With Hugging Face transformers, setting the context window size is not needed, but the output size is controlled by the max_new_tokens parameter to LLM.prompt.
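Because the same setting has a different name on each backend, it can help to keep the names in one lookup table. The sketch below is illustrative only: PARAM_NAMES and backend_kwargs are hypothetical helpers, not part of OnPrem.LLM, and the table contains only the parameter names mentioned in this section.

```python
# Backend-specific names for two common settings, taken from the text above.
PARAM_NAMES = {
    "llama-cpp": {"context_window": "n_ctx", "output_size": "max_tokens"},
    "ollama": {"context_window": "num_ctx", "output_size": "num_predict"},
    # transformers: no context-window setting is needed
    "transformers": {"output_size": "max_new_tokens"},
}


def backend_kwargs(backend: str, **settings: int) -> dict:
    """Translate generic setting names into the names a given backend expects."""
    names = PARAM_NAMES[backend]
    # Settings the backend has no name for are silently dropped.
    return {names[key]: value for key, value in settings.items() if key in names}


llama_kwargs = backend_kwargs("llama-cpp", context_window=8192, output_size=1024)
ollama_kwargs = backend_kwargs("ollama", context_window=8192, output_size=1024)
hf_kwargs = backend_kwargs("transformers", context_window=8192, output_size=1024)
print(llama_kwargs, ollama_kwargs, hf_kwargs)
```

The resulting dictionaries could then be unpacked into LLM or LLM.prompt as appropriate for the backend (for transformers, max_new_tokens is supplied to LLM.prompt, as noted above).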

Send Prompts to the LLM to Solve Problems

This is an example of few-shot prompting, where we provide an example of what we want the LLM to do.

prompt = """Extract the names of people in the supplied sentences.

Separate names with commas and place on a single line.

# Example 1:

Sentence: James Gandolfini and Paul Newman were great actors.

People:

James Gandolfini, Paul Newman

# Example 2:

Sentence:

I like Cillian Murphy's acting. Florence Pugh is great, too.

People:"""

saved_output = llm.prompt(prompt, stop=['\n\n'])

Cillian Murphy, Florence Pugh

Additional prompt examples are shown here.
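When there are several labeled examples, the prompt can be assembled programmatically instead of by hand. This is a minimal sketch; build_few_shot_prompt is a hypothetical helper (not an OnPrem.LLM function) that reproduces the layout of the prompt shown above.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the open query."""
    parts = [instruction, ""]
    for i, (sentence, people) in enumerate(examples, start=1):
        parts += [f"# Example {i}:", f"Sentence: {sentence}", "People:", people, ""]
    # The final example is left unanswered so the model completes it.
    parts += [f"# Example {len(examples) + 1}:", f"Sentence: {query}", "People:"]
    return "\n".join(parts)


few_shot_prompt = build_few_shot_prompt(
    "Extract the names of people in the supplied sentences.\n"
    "Separate names with commas and place on a single line.",
    [("James Gandolfini and Paul Newman were great actors.",
      "James Gandolfini, Paul Newman")],
    "I like Cillian Murphy's acting. Florence Pugh is great, too.",
)
print(few_shot_prompt)
```

The result can be passed to llm.prompt together with stop=['\n\n'], as in the example above, so that generation ends at the first blank line rather than continuing with further invented examples.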

Talk to Your Documents

Answers are generated from the content of your documents (i.e., retrieval augmented generation or RAG). Here, we will use GPU offloading to speed up answer generation using the default model. However, the Zephyr-7B model may perform even better and respond faster, and it is used in our RAG example notebook.

from onprem import LLM

llm = LLM(n_gpu_layers=-1, store_type='sparse', verbose=False)

llama_new_context_with_model: n_ctx_per_seq (3904) < n_ctx_train (32768) -- the full capacity of the model will not be utilized

The default embedding model is: sentence-transformers/all-MiniLM-L6-v2. You can change it by supplying the embedding_model_name to LLM.

Step 1: Ingest the Documents into a Vector Database

As of v0.10.0, you have the option of storing documents in either a dense vector store (i.e., Chroma) or a sparse vector store (i.e., a built-in keyword search index). Sparse vector stores sacrifice a small amount of inference speed for significant improvements in ingestion speed (useful for larger document sets) and also assume answer sources will include at least one word from the question. To select the store type, supply either store_type="dense" or store_type="sparse" when creating the LLM. As you can see above, we use a sparse vector store here.
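To illustrate why a keyword-based store needs at least one shared word between the question and the answer source, here is a toy keyword retriever. It is a sketch under simplifying assumptions and does not reflect how OnPrem.LLM's sparse store is implemented; tokenize and keyword_search are hypothetical helpers.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_search(question, chunks, limit=2):
    """Rank chunks by how often they contain terms from the question."""
    q_terms = set(tokenize(question))
    scored = []
    for chunk in chunks:
        counts = Counter(tokenize(chunk))
        score = sum(counts[term] for term in q_terms)
        if score > 0:  # chunks sharing no term with the question are never returned
            scored.append((score, chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:limit]]


chunks = [
    "ktrain is a Python library for machine learning.",
    "The moon orbits the Earth.",
    "Supervised learning tasks in ktrain follow a standard template.",
]
hits = keyword_search("What is ktrain?", chunks)
misses = keyword_search("Which toolkit wraps Keras?", chunks)
print(hits)
print(misses)
```

The second query shares no words with any chunk, so nothing is retrieved. A dense (embedding-based) store does not have this limitation, at the cost of computing embeddings during ingestion.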

llm.ingest("./tests/sample_data")

Creating new vectorstore at /home/amaiya/onprem_data/vectordb/sparse

Loading documents from ./tests/sample_data

Loading new documents: 100%|██████████████████████| 6/6 [00:09<00:00, 1.51s/it]

Processing and chunking 43 new documents: 100%|██████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 116.11it/s]

Split into 354 chunks of text (max. 500 chars each for text; max. 2000 chars for tables)

100%|███████████████████████████████████████████████████████████████████████████████████████████████████████| 354/354 [00:00<00:00, 2548.70it/s]

Ingestion complete! You can now query your documents using the LLM.ask or LLM.chat methods

The default chunk_size is set quite low at 500 characters. You can increase it by supplying chunk_size to llm.ingest. You can customize the ingestion process even further by accessing the underlying vector store directly, as illustrated in the advanced RAG example.
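To see how chunk_size affects the number of chunks produced, here is a minimal sketch of character-limited chunking. It is not OnPrem.LLM's actual splitter; chunk_text is a hypothetical helper that greedily packs whole words into chunks.

```python
def chunk_text(text, chunk_size):
    """Greedily pack whitespace-separated words into chunks of up to chunk_size characters.

    A single word longer than chunk_size is kept whole in its own chunk.
    """
    chunks, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) <= chunk_size or not current:
            current = candidate
        else:
            chunks.append(current)
            current = word
    if current:
        chunks.append(current)
    return chunks


text = "word " * 300  # 1,500 characters of input
small = chunk_text(text, chunk_size=500)
large = chunk_text(text, chunk_size=1000)
print(len(small), len(large))
```

Larger chunks give the LLM more context per retrieved source but consume more of the context window, so chunk_size is worth tuning together with the number of sources retrieved.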

Step 2: Answer Questions About the Documents

question = """What is ktrain?"""

result = llm.ask(question)

ktrain is a low-code machine learning platform. It provides out-of-the-box support for training models on various types of data such as text, vision, graph, and tabular.

The sources used by the model to generate the answer are stored in result['source_documents']. You can adjust the number of sources (i.e., chunks) considered by supplying the limit parameter to llm.ask.

print("\nSources:\n")

for i, document in enumerate(result["source_documents"]):

    print(f"\n{i+1}.> " + document.metadata["source"] + ":")

    print(document.page_content)

Sources:

1.> /home/amaiya/projects/ghub/onprem/nbs/tests/sample_data/ktrain_paper/ktrain_paper.pdf:

transferred to, and executed on new data in a production environment.

ktrain is a Python library for machine learning with the goal of presenting a simple,

unified interface to easily perform the above steps regardless of the type of data (e.g., text

vs. images vs. graphs). Moreover, each of the three steps above can be accomplished in

©2022 Arun S. Maiya.

License: CC-BY 4.0, see https://creativecommons.org/licenses/by/4.0/. Attribution requirements are

2.> /home/amaiya/projects/ghub/onprem/nbs/tests/sample_data/ktrain_paper/ktrain_paper.pdf:

custom models and data formats, as well. Inspired by other low-code (and no-code) open-

source ML libraries such as fastai (Howard and Gugger, 2020) and ludwig (Molino et al.,

2019), ktrain is intended to help further democratize machine learning by enabling begin-

ners and domain experts with minimal programming or data science experience to build

sophisticated machine learning models with minimal coding. It is also a useful toolbox for

3.> /home/amaiya/projects/ghub/onprem/nbs/tests/sample_data/ktrain_paper/ktrain_paper.pdf:

Apache license, and available on GitHub at: https://github.com/amaiya/ktrain.

2. Building Models

Supervised learning tasks in ktrain follow a standard, easy-to-use template.

STEP 1: Load and Preprocess Data. This step involves loading data from different

sources and preprocessing it in a way that is expected by the model. In the case of text,

this may involve language-specific preprocessing (e.g., tokenization). In the case of images,

4.> /home/amaiya/projects/ghub/onprem/nbs/tests/sample_data/ktrain_paper/ktrain_paper.pdf:

AutoKeras (Jin et al., 2019) and AutoGluon (Erickson et al., 2020) lack some key “pre-

canned” features in ktrain, which has the strongest support for natural language processing

and graph-based data. Support for additional features is planned for the future.

5. Conclusion

This work presented ktrain, a low-code platform for machine learning. ktrain currently in-

cludes out-of-the-box support for training models on text, vision, graph, and tabular

Extract Text from Documents

The load_single_document function can extract text from a range of different document formats (e.g., PDFs, Microsoft PowerPoint, Microsoft Word, etc.). It is automatically invoked when calling LLM.ingest. Extracted text is represented as LangChain Document objects, where Document.page_content stores the extracted text and Document.metadata stores any extracted document metadata.

For PDFs, in particular, a number of different options are available depending on your use case.

Fast PDF Extraction (default)

- Pro: Fast

- Con: Does not infer/retain structure of tables in PDF documents

from onprem.ingest import load_single_document

docs = load_single_document('tests/sample_data/ktrain_paper/ktrain_paper.pdf')

docs[0].metadata

{'source': '/home/amaiya/projects/ghub/onprem/nbs/sample_data/1/ktrain_paper.pdf',

'file_path': '/home/amaiya/projects/ghub/onprem/nbs/sample_data/1/ktrain_paper.pdf',

'page': 0,

'total_pages': 9,

'format': 'PDF 1.4',

'title': '',

'author': '',

'subject': '',

'keywords': '',

'creator': 'LaTeX with hyperref',

'producer': 'dvips + GPL Ghostscript GIT PRERELEASE 9.22',

'creationDate': "D:20220406214054-04'00'",

'modDate': "D:20220406214054-04'00'",

'trapped': ''}

Automatic OCR of PDFs

- Pro: Automatically extracts text from scanned PDFs

- Con: Slow

The load_single_document function will automatically OCR PDFs that require it (i.e., PDFs that are scanned hard-copies of documents). If a document is OCR’ed during extraction, the metadata['ocr'] field will be populated with True.

docs = load_single_document('tests/sample_data/ocr_document/lynn1975.pdf')

docs[0].metadata

{'source': '/home/amaiya/projects/ghub/onprem/nbs/sample_data/4/lynn1975.pdf',

'ocr': True}

Markdown Conversion in PDFs

- Pro: Better chunking for QA

- Con: Slower than default PDF extraction

The load_single_document function can convert PDFs to Markdown instead of plain text if you supply pdf_markdown=True as an argument:

docs = load_single_document('your_pdf_document.pdf',

                            pdf_markdown=True)

Converting to Markdown can facilitate downstream tasks like question-answering. For instance, when supplying pdf_markdown=True to LLM.ingest, documents are chunked in a Markdown-aware fashion (e.g., the abstract of a research paper tends to be kept together into a single chunk instead of being split up). Note that Markdown will not be extracted if the document requires OCR.

Inferring Table Structure in PDFs

- Pro: Makes it easier for LLMs to analyze information in tables

- Con: Slower than default PDF extraction

When supplying infer_table_structure=True to either load_single_document or LLM.ingest, tables are inferred and extracted from PDFs using a TableTransformer model. Tables are represented as Markdown (or HTML if Markdown conversion is not possible).

docs = load_single_document('your_pdf_document.pdf',

                            infer_table_structure=True)

Parsing Extracted Text Into Sentences or Paragraphs

For some analyses (e.g., using prompts for information extraction), it may be useful to parse the text extracted from documents into individual sentences or paragraphs. This can be accomplished using the segment function:

from onprem.ingest import load_single_document

from onprem.utils import segment

text = load_single_document('tests/sample_data/sotu/state_of_the_union.txt')[0].page_content

segment(text, unit='paragraph')[0]

'Madam Speaker, Madam Vice President, our First Lady and Second Gentleman. Members of Congress and the Cabinet. Justices of the Supreme Court. My fellow Americans.'

segment(text, unit='sentence')[0]

'Madam Speaker, Madam Vice President, our First Lady and Second Gentleman.'

Summarization Pipeline

Summarize your raw documents (e.g., PDFs, MS Word) with an LLM.

Map-Reduce Summarization

Summarize each chunk in a document and then generate a single summary from the individual summaries.
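The map-reduce idea itself can be sketched in a few lines. This is a simplified illustration and not the Summarizer implementation: map_reduce_summarize is a hypothetical helper, and first_sentence is a stand-in for an LLM call so the sketch runs without a model.

```python
from typing import Callable, Sequence


def map_reduce_summarize(chunks: Sequence[str], summarize: Callable[[str], str]) -> str:
    # Map step: summarize each chunk independently.
    partial_summaries = [summarize(chunk) for chunk in chunks]
    # Reduce step: summarize the concatenation of the partial summaries.
    return summarize(" ".join(partial_summaries))


# Stand-in "summarizer" that keeps only the first sentence of its input.
def first_sentence(text: str) -> str:
    return text.split(". ")[0].rstrip(".") + "."


chunks = [
    "ktrain is a low-code library. It wraps several toolkits.",
    "It supports text data. It also supports images.",
]
summary = map_reduce_summarize(chunks, first_sentence)
print(summary)
```

Because each chunk is summarized on its own, the map step never needs more context than a single chunk, which is what makes the approach workable for documents longer than the model's context window.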

from onprem import LLM

llm = LLM(n_gpu_layers=-1, verbose=False, mute_stream=True) # disabling viewing of intermediate summarization prompts/inferences

from onprem.pipelines import Summarizer

summ = Summarizer(llm)

resp = summ.summarize('tests/sample_data/ktrain_paper/ktrain_paper.pdf', max_chunks_to_use=5) # omit max_chunks_to_use parameter to consider entire document

print(resp['output_text'])

Ktrain is an open-source machine learning library that offers a unified interface for various machine learning tasks. The library supports both supervised and non-supervised machine learning, and includes methods for training models, evaluating models, making predictions on new data, and providing explanations for model decisions. Additionally, the library integrates with various explainable AI libraries such as shap, eli5 with lime, and others to provide more interpretable models.

Concept-Focused Summarization

Summarize a large document with respect to a particular concept of interest.

from onprem import LLM

from onprem.pipelines import Summarizer

llm = LLM(default_model='zephyr', n_gpu_layers=-1, verbose=False, temperature=0)

summ = Summarizer(llm)

summary, sources = summ.summarize_by_concept('tests/sample_data/ktrain_paper/ktrain_paper.pdf', concept_description="question answering")

The context provided describes the implementation of an open-domain question-answering system using ktrain, a low-code library for augmented machine learning. The system follows three main steps: indexing documents into a search engine, locating documents containing words in the question, and extracting candidate answers from those documents using a BERT model pretrained on the SQuAD dataset. Confidence scores are used to sort and prune candidate answers before returning results. The entire workflow can be implemented with only three lines of code using ktrain's SimpleQA module. This system allows for the submission of natural language questions and receives exact answers, as demonstrated in the provided example. Overall, the context highlights the ease and accessibility of building sophisticated machine learning models, including open-domain question-answering systems, through ktrain's low-code interface.

Information Extraction Pipeline

Extract information from raw documents (e.g., PDFs, MS Word documents) with an LLM.

from onprem import LLM

from onprem.pipelines import Extractor

# Notice that we're using a cloud-based, off-premises model here! See "OpenAI" section below.

llm = LLM(model_url='openai://gpt-3.5-turbo', verbose=False, mute_stream=True, temperature=0)

extractor = Extractor(llm)

prompt = """Extract the names of research institutions (e.g., universities, research labs, corporations, etc.)

from the following sentence delimited by three backticks. If there are no organizations, return NA.

If there are multiple organizations, separate them with commas.

```{text}```

"""

df = extractor.apply(prompt, fpath='tests/sample_data/ktrain_paper/ktrain_paper.pdf', pdf_pages=[1], stop=['\n'])

df.loc[df['Extractions'] != 'NA'].Extractions[0]

/home/amaiya/projects/ghub/onprem/onprem/core.py:159: UserWarning: The model you supplied is gpt-3.5-turbo, an external service (i.e., not on-premises). Use with caution, as your data and prompts will be sent externally.

warnings.warn(f'The model you supplied is {self.model_name}, an external service (i.e., not on-premises). '+\

'Institute for Defense Analyses'

Few-Shot Classification

Make accurate text classification predictions using only a tiny number of labeled examples.

# create classifier

from onprem.pipelines import FewShotClassifier

clf = FewShotClassifier(use_smaller=True)

# Fetching data

from sklearn.datasets import fetch_20newsgroups

import pandas as pd

import numpy as np

classes = ["soc.religion.christian", "sci.space"]

newsgroups = fetch_20newsgroups(subset="all", categories=classes)

corpus, group_labels = np.array(newsgroups.data), np.array(newsgroups.target_names)[newsgroups.target]

# Wrangling data into a dataframe and selecting training examples

data = pd.DataFrame({"text": corpus, "label": group_labels})

train_df = data.groupby("label").sample(5)

test_df = data.drop(index=train_df.index)

# X_sample only contains 5 examples of each class!

X_sample, y_sample = train_df['text'].values, train_df['label'].values

# test set

X_test, y_test = test_df['text'].values, test_df['label'].values

# train

clf.train(X_sample, y_sample, max_steps=20)

# evaluate

print(clf.evaluate(X_test, y_test, print_report=False)['accuracy'])

#output: 0.98

# make predictions

clf.predict(['Elon Musk likes launching satellites.']).tolist()[0]

#output: sci.space

TIP: You can also easily train a wide range of traditional text classification models using both Hugging Face transformers and scikit-learn as backends.

Using Hugging Face Transformers Instead of Llama.cpp

By default, the LLM backend employed by OnPrem.LLM is llama-cpp-python, which requires models in GGUF format. As of v0.5.0, it is now possible to use Hugging Face transformers as the LLM backend instead. This is accomplished by using the model_id parameter (instead of supplying a model_url argument). In the example below, we run the Llama-3.1-8B model.

# llama-cpp-python does NOT need to be installed when using model_id parameter

llm = LLM(model_id="hugging-quants/Meta-Llama-3.1-8B-Instruct-AWQ-INT4", device_map='cuda')

This allows you to more easily use any model on the Hugging Face hub in SafeTensors format provided it can be loaded with the Hugging Face transformers.pipeline. Note that, when using the model_id parameter, the prompt_template is set automatically by transformers.

The Llama-3.1 model loaded above was quantized using AWQ, which allows the model to fit onto smaller GPUs (e.g., laptop GPUs with 6GB of VRAM) similar to the default GGUF format. AWQ models require the autoawq package to be installed: pip install autoawq (AWQ only supports Linux systems, including Windows Subsystem for Linux). If you need to load a model that is not quantized, you can supply a quantization configuration at load time (known as “inflight quantization”). In the following example, we load an unquantized Zephyr-7B-beta model that will be quantized during loading to fit on GPUs with as little as 6GB of VRAM:

from transformers import BitsAndBytesConfig

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype="float16",

bnb_4bit_use_double_quant=True,

)

llm = LLM(model_id="HuggingFaceH4/zephyr-7b-beta", device_map='cuda',

          model_kwargs={"quantization_config":quantization_config})

Supplying a quantization_config uses the bitsandbytes library, a lightweight Python wrapper around CUDA custom functions (in particular, 8-bit optimizers, LLM.int8() matrix multiplication, and 8-bit and 4-bit quantization functions). There are ongoing efforts by the bitsandbytes team to support multiple backends in addition to CUDA. If you receive errors related to bitsandbytes, please refer to the bitsandbytes documentation.
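A back-of-the-envelope calculation shows why 4-bit quantization lets a 7B-parameter model fit on a 6GB GPU. The sketch below estimates memory for the weights only; it ignores activations, the KV cache, and the small overhead of quantization constants, so real usage will be somewhat higher. The helper weight_memory_gib is hypothetical and used purely for illustration.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Memory needed for the weights alone, in GiB (ignores activations and KV cache)."""
    return n_params * bits_per_weight / 8 / 2**30


fp16_gib = weight_memory_gib(7e9, 16)  # roughly 13.0 GiB: too large for a 6GB GPU
int4_gib = weight_memory_gib(7e9, 4)   # roughly 3.3 GiB: leaves headroom on a 6GB GPU
print(f"{fp16_gib:.1f} GiB at 16 bits, {int4_gib:.1f} GiB at 4 bits")
```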

Structured and Guided Outputs

LLMs do not always listen to instructions properly. Structured outputs for LLMs are a feature ensuring model responses follow a strict, user-defined format (like JSON or XML schema) instead of free-form text, making outputs predictable, machine-readable, and easily integrable into applications.

Natively Supported Structured Outputs

A number of LLM services (e.g., vLLM, OpenAI, Anthropic Claude, AWS GovCloud Bedrock) include native support for producing structured outputs. To take advantage of this capability when it exists, you can supply a Pydantic model representing the desired output format to the response_format parameter of LLM.prompt.

Anthropic or OpenAI

from onprem import LLM

from pydantic import BaseModel

class ContactInfo(BaseModel):

    name: str

    email: str

    plan_interest: str

    demo_requested: bool

# Create LLM instance for Claude

llm = LLM("anthropic/claude-3-7-sonnet-latest")

# Use structured output - this should automatically use Claude's native API

result = llm.prompt(

"Extract info from: John Smith (john@example.com) is interested in our Enterprise plan and wants to schedule a demo for next Tuesday at 2pm.",

response_format=ContactInfo

)

print(f"Name: {result.name}")

print(f"Email: {result.email}")

print(f"Plan: {result.plan_interest}")

print(f"Demo: {result.demo_requested}")

The above approach using the response_format parameter works with both Anthropic and OpenAI as LLM backends.

AWS GovCloud Bedrock

A structured output example using AWS GovCloud Bedrock is shown here.

vLLM

For vLLM, you can generate structured outputs using documented extra parameters, such as the extra_body argument, as illustrated below:

from onprem import LLM

llm = LLM(model_url='http://localhost:8666/v1', api_key='test123', model='MyGPT')

# classification-based structured outputs

result = llm.prompt('Classify this sentiment: vLLM is wonderful!',

extra_body={"structured_outputs": {"choice": ["positive", "negative"]}})

# OUTPUT: positive

# JSON-based structured outputs

from pydantic import BaseModel, Field

class MeasuredQuantity(BaseModel):

    value: str = Field(description="numerical value - number only")

    unit: str = Field(description="unit of measurement")

response_format = {"type": "json_schema",

"json_schema": {

"name": MeasuredQuantity.__name__.lower(),

"schema": MeasuredQuantity.model_json_schema()}}

result = llm.prompt('Extract unit and value from the following: He was going 35 mph.', response_format=response_format)

# OUTPUT: { "value": "35", "unit": "mph" }

# RegEx-based structured outputs

result = llm.prompt(

    "Generate an example email address for Alan Turing, who works in Enigma. End in "

    ".com and new line.",

    extra_body={"structured_outputs": {"regex": r"\w+@\w+\.com\n"}, "stop": ["\n"]},

)

# OUTPUT: Alan_Turing@enigma.com

Ollama

from onprem import LLM

from pydantic import BaseModel

class Pet(BaseModel):

    name: str

    animal: str

    age: int

    color: str | None

    favorite_toy: str | None

class PetList(BaseModel):

    pets: list[Pet]

llm = LLM('ollama/llama3.1')

result = llm.prompt('I have two cats named Luna and Loki...', format=PetList.model_json_schema())

When using an LLM backend that does not natively support structured outputs, supplying a Pydantic model via the response_format parameter to LLM.prompt should automatically fall back to a prompt-based approach to structured outputs, as described next.

Tip: When using natively-supported structured outputs, it is important to include an actual instruction in the prompt (e.g., “Classify this sentiment”, “Extract info from”, etc.). With prompt-based structured outputs (described below), the instruction can often be omitted.

Prompt-Based Structured Outputs

The LLM.pydantic_prompt method also allows you to specify the desired structure of the LLM’s output as a Pydantic model. Internally, LLM.pydantic_prompt wraps the user-supplied prompt within a larger prompt telling the LLM to output results in a specific JSON format. It is sometimes less efficient or reliable than the native methods described above, but it is applicable to any LLM. Since calling LLM.prompt with the response_format parameter will automatically invoke LLM.pydantic_prompt when necessary, you will typically not have to call LLM.pydantic_prompt directly.
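As a rough illustration of the wrapping idea described above, the sketch below builds a larger prompt that requests JSON and then parses a reply into a typed object. It is not OnPrem.LLM's actual implementation: the helper names wrap_prompt and parse_reply are hypothetical, a standard-library dataclass stands in for the Pydantic model, and the LLM reply is simulated rather than generated.

```python
import json
from dataclasses import dataclass, fields

@dataclass
class Joke:
    setup: str
    punchline: str

def wrap_prompt(user_prompt: str, model: type) -> str:
    """Hypothetical helper: append instructions asking for JSON with the model's field names."""
    keys = ", ".join(f'"{f.name}"' for f in fields(model))
    return (
        f"{user_prompt}\n\n"
        f"Respond only with a JSON object containing the keys: {keys}."
    )

def parse_reply(reply: str, model: type):
    """Hypothetical helper: load the text between the first '{' and the last '}' into the model."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(reply[start:end + 1])
    return model(**data)

# Simulated LLM reply (no model is called in this sketch)
reply = 'Sure! {"setup": "Why couldn\'t the bicycle stand alone?", "punchline": "Because it was two-tired!"}'

wrapped = wrap_prompt("Tell me a joke.", Joke)
joke = parse_reply(reply, Joke)

print(wrapped)
print(joke.punchline)
```

Because this approach relies on the model following instructions rather than on constrained decoding, the parsing step can fail on malformed replies, which is one reason native structured outputs tend to be more reliable when available.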

from pydantic import BaseModel, Field

class Joke(BaseModel):

    setup: str = Field(description="question to set up a joke")

    punchline: str = Field(description="answer to resolve the joke")

from onprem import LLM

llm = LLM(default_model='llama', verbose=False)

structured_output = llm.pydantic_prompt('Tell me a joke.', pydantic_model=Joke)

llama_new_context_with_model: n_ctx_per_seq (3904) < n_ctx_train (131072) -- the full capacity of the model will not be utilized

{

    "setup": "Why couldn't the bicycle stand alone?",

    "punchline": "Because it was two-tired!"

}

The output is a Pydantic object instead of a string:

structured_output

Joke(setup="Why couldn't the bicycle stand alone?", punchline='Because it was two-tired!')

print(structured_output.setup)

print()

print(structured_output.punchline)

Why couldn't the bicycle stand alone?

Because it was two-tired!

You can also use OnPrem.LLM with the Guidance package to guide the LLM to generate outputs based on your conditions and constraints. We’ll show a couple of examples here, but see our documentation on guided prompts for more information.

from onprem import LLM

llm = LLM(n_gpu_layers=-1, verbose=False)

from onprem.pipelines.guider import Guider

guider = Guider(llm)

With the Guider, you can use regular expressions to control LLM generation:

from guidance import gen

prompt = """Question: Luke has ten balls. He gives three to his brother. How many balls does he have left?
Answer: """ + gen(name='answer', regex=r'\d+')

guider.prompt(prompt, echo=False)

{'answer': '7'}

prompt = '19, 18,' + gen(name='output', max_tokens=50, stop_regex=r'[^\d]7[^\d]')

guider.prompt(prompt)

19, 18, 17, 16, 15, 14, 13, 12, 11, 10, 9, 8,

{'output': ' 17, 16, 15, 14, 13, 12, 11, 10, 9, 8,'}

See the documentation for more examples of how to use Guidance with OnPrem.LLM.
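The two patterns used in the Guider examples above can be checked on their own with Python's re module, which may help when designing a new constraint. This is only a sketch of what the patterns match; it does not show how Guidance applies them during decoding, and the continuation string is a made-up example of what an unconstrained model might produce.

```python
import re

answer_pattern = re.compile(r"\d+")         # same pattern as regex= above
stop_pattern = re.compile(r"[^\d]7[^\d]")   # same pattern as stop_regex= above

# A digits-only constraint accepts "7" but rejects free text.
print(bool(answer_pattern.fullmatch("7")))
print(bool(answer_pattern.fullmatch("seven")))

# Hypothetical unconstrained continuation of the prompt '19, 18,'.
continuation = " 17, 16, 15, 14, 13, 12, 11, 10, 9, 8, 7, 6,"

# The stop pattern needs a non-digit on both sides of the 7,
# so it skips the 7 inside "17" and matches the standalone " 7,".
match = stop_pattern.search(continuation)
kept = continuation[:match.start()]
print(repr(kept))
```

The text kept before the match is the same string shown in the 'output' field above, which is why the countdown ends at 8.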

Solving Tasks With Agents

from onprem import LLM

from onprem.pipelines import Agent

llm = LLM('openai/gpt-4o-mini', mute_stream=True)

agent = Agent(llm)

agent.add_webview_tool()

answer = agent.run("What is the highest level of education of the person listed on this page: https://arun.maiya.net?")

# ANSWER: Ph.D. in Computer Science

See the example notebook on agents for more information.

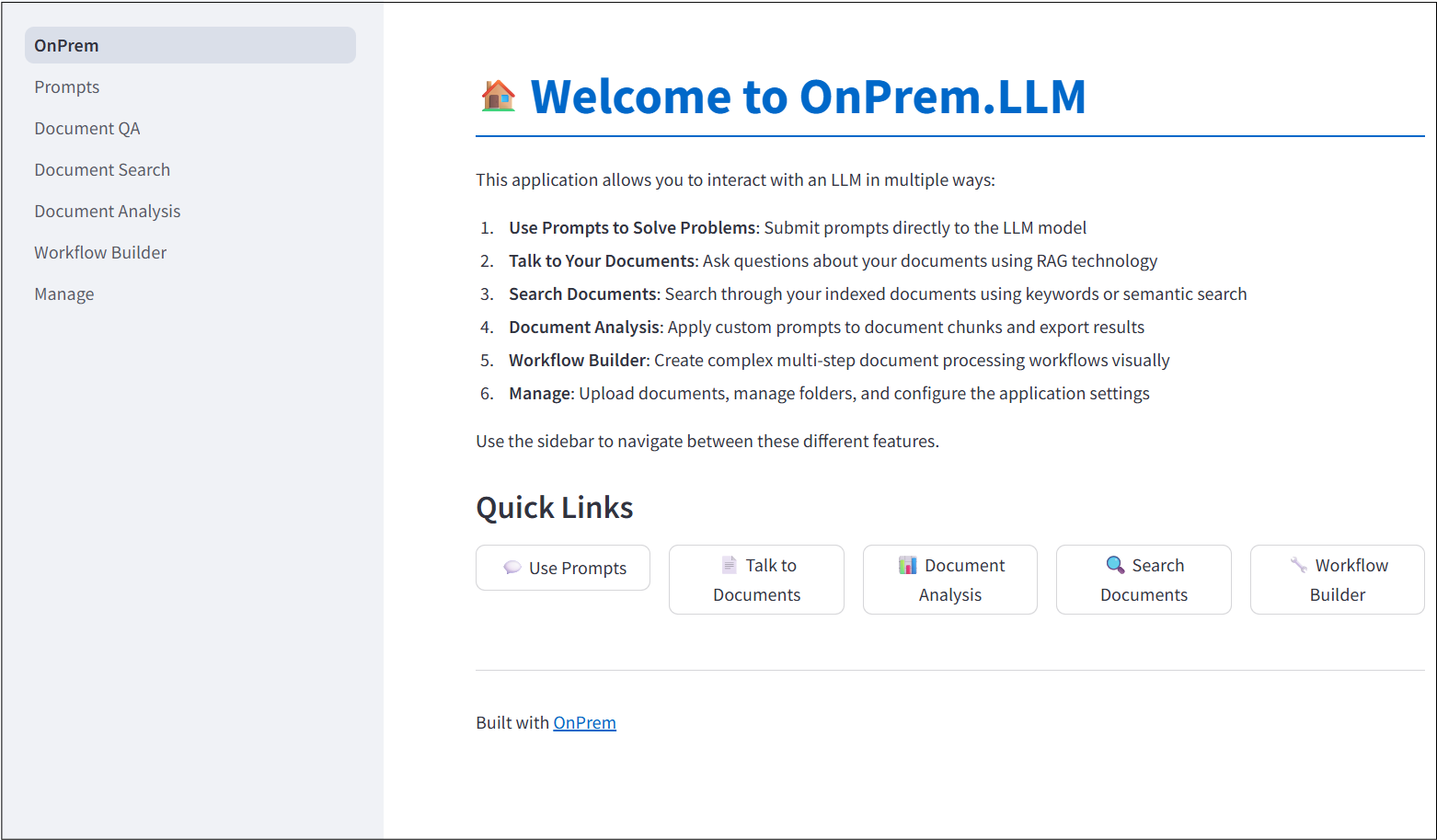

Built-In Web App

OnPrem.LLM includes a built-in Web app to access the LLM. To start it, run the following command after installation:

onprem --port 8000

Then, enter localhost:8000 (or <domain_name>:8000 if running on a remote server) in a Web browser to access the application:

For more information, see the corresponding documentation.

Examples

The documentation includes many examples, including:

FAQ

How do I use other models with OnPrem.LLM?

You can supply any model of your choice using the model_url and model_id parameters to LLM (see cheat sheet above).

Here, we will go into detail on how to supply a custom GGUF model using the llama.cpp backend.

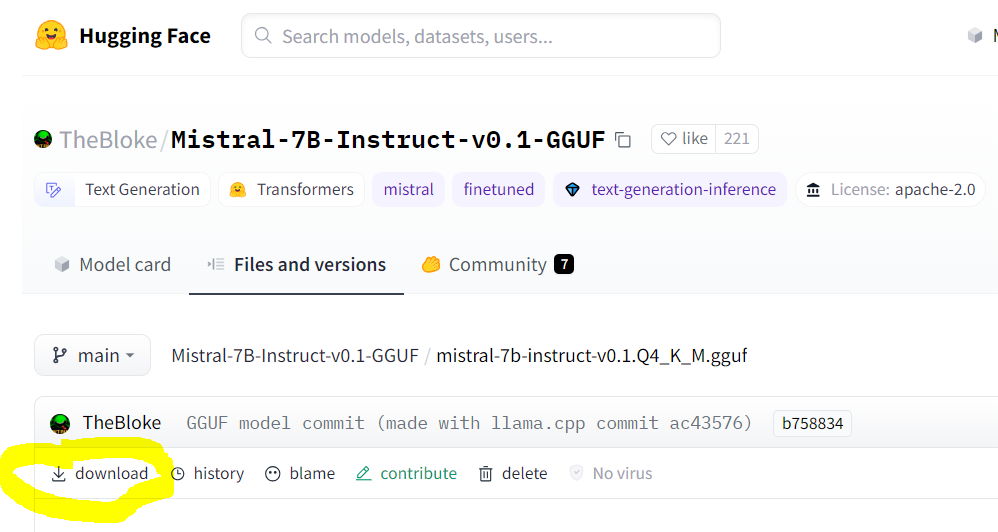

You can find llama.cpp-supported models with GGUF in the file name on huggingface.co.

Make sure you are pointing to the URL of the actual GGUF model file, which is the “download” link on the model’s page. An example for Mistral-7B is shown below:

When using the llama.cpp backend, GGUF models have specific prompt formats that need to be supplied to LLM. For instance, the prompt template required for Zephyr-7B, as described on the model’s page, is:

<|system|>\n</s>\n<|user|>\n{prompt}</s>\n<|assistant|>

So, to use the Zephyr-7B model, you must supply the prompt_template argument to the LLM constructor (or specify it in the webapp.yml configuration for the Web app).

# how to use Zephyr-7B with OnPrem.LLM

llm = LLM(model_url='https://huggingface.co/TheBloke/zephyr-7B-beta-GGUF/resolve/main/zephyr-7b-beta.Q4_K_M.gguf',

          prompt_template="<|system|>\n</s>\n<|user|>\n{prompt}</s>\n<|assistant|>",

          n_gpu_layers=33)

llm.prompt("List three cute names for a cat.")

Prompt templates are not required for any other LLM backend (e.g., when using Ollama as backend or when using the model_id parameter for transformers models). Prompt templates are also not required if using any of the default models.

When installing onprem, I’m getting “build” errors related to llama-cpp-python (or chroma-hnswlib) on Windows/Mac/Linux?

See this LangChain documentation on Llama.cpp for help on installing the llama-cpp-python package for your system. Additional tips for different operating systems are shown below:

For Linux systems like Ubuntu, try this: sudo apt-get install build-essential g++ clang. Other tips are here.

For Windows systems, please try following these instructions. We recommend you use Windows Subsystem for Linux (WSL) instead of using Microsoft Windows directly. If you do need to use Microsoft Windows directly, be sure to install the Microsoft C++ Build Tools and make sure that Desktop development with C++ is selected.

For Macs, try following these tips.

There are also various other tips for each of the above OSes in this privateGPT repo thread. Of course, you can also easily use OnPrem.LLM on Google Colab.

Finally, if you still can’t overcome issues with building llama-cpp-python, you can try installing the pre-built wheel file for your system:

Example:

pip install llama-cpp-python==0.2.90 --extra-index-url https://abetlen.github.io/llama-cpp-python/whl/cpu

Tip: There are pre-built wheel files for chroma-hnswlib, as well. If running pip install onprem fails on building chroma-hnswlib, it may be because a pre-built wheel doesn’t yet exist for the version of Python you’re using (in which case you can try downgrading Python).

I’m behind a corporate firewall and am receiving an SSL error when trying to download the model?

Try this:

from onprem import LLM

LLM.download_model(url, ssl_verify=False)

You can download the embedding model (used by LLM.ingest and LLM.ask) as follows:

wget --no-check-certificate https://public.ukp.informatik.tu-darmstadt.de/reimers/sentence-transformers/v0.2/all-MiniLM-L6-v2.zip

Supply the unzipped folder name as the embedding_model_name argument to LLM.

If you’re getting SSL errors even when running pip install, try this:

pip install --trusted-host pypi.org --trusted-host files.pythonhosted.org pip_system_certs

How do I use this on a machine with no internet access?

Use the LLM.download_model method to download the model files to <your_home_directory>/onprem_data and transfer them to the same location on the air-gapped machine.

For the ingest and ask methods, you will also need to download and transfer the embedding model files:

from sentence_transformers import SentenceTransformer

model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

model.save('/some/folder')

Copy the some/folder folder to the air-gapped machine and supply the path to LLM via the embedding_model_name parameter.

My model is not loading when I call llm = LLM(...)?

This can happen if the model file is corrupt (in which case you should delete it from <home directory>/onprem_data and re-download). It can also happen if llama-cpp-python needs to be upgraded to the latest version.

I’m getting an “Illegal instruction (core dumped)” error when instantiating a langchain.llms.Llamacpp or onprem.LLM object?

Your CPU may not support instructions that cmake is using for one reason or another (e.g., due to Hyper-V in VirtualBox settings). You can try turning them off when building and installing llama-cpp-python:

# example

CMAKE_ARGS="-DGGML_CUDA=ON -DGGML_AVX2=OFF -DGGML_AVX=OFF -DGGML_F16C=OFF -DGGML_FMA=OFF" FORCE_CMAKE=1 pip install --force-reinstall llama-cpp-python --no-cache-dir

How can I speed up LLM.ingest?

By default, a GPU, if available, will be used to compute embeddings, so ensure PyTorch is installed with GPU support. You can explicitly control the device used for computing embeddings with the embedding_model_kwargs argument.

from onprem import LLM

llm = LLM(embedding_model_kwargs={'device':'cuda'})

You can also supply store_type="sparse" to LLM to use a sparse vector store, which sacrifices a small amount of inference speed (LLM.ask) for significant speedups during ingestion (LLM.ingest).

from onprem import LLM

llm = LLM(store_type="sparse")

Note, however, that unlike dense vector stores, sparse vector stores assume answer sources will contain at least one word in common with the question.
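To make the word-overlap caveat concrete, here is a toy term-matching scorer. It is only a sketch of keyword-style retrieval in general and is not how OnPrem.LLM's sparse store is implemented; the functions tokenize and overlap_score and the sample passages are invented for illustration.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def overlap_score(question: str, passage: str) -> int:
    """Count passage tokens that also appear in the question."""
    q_terms = set(tokenize(question))
    counts = Counter(tokenize(passage))
    return sum(n for term, n in counts.items() if term in q_terms)

question = "What was the speed of the vehicle?"
passages = [
    "The vehicle reached a speed of 35 mph.",   # shares several words with the question
    "An automobile travelled at 35 mph.",       # relevant, but shares no words
]

scores = [overlap_score(question, p) for p in passages]
print(scores)  # [4, 0]
```

The second passage answers the question but scores zero because it uses different vocabulary. A dense store can still match such a passage through embedding similarity, at the cost of computing embeddings during ingestion.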

How to Cite

Please cite the following paper when using OnPrem.LLM:

@article{maiya2025generativeaiffrdcs,

  title={Generative AI for FFRDCs},

  author={Arun S. Maiya},

  year={2025},

  eprint={2509.21040},

  archivePrefix={arXiv},

  primaryClass={cs.CL},

  url={https://arxiv.org/abs/2509.21040},

}